Após o registo de um domínio e criação de um site, a maioria dos webmasters quer ver o seu site indexado e aparecer nas primeiras posições no Google. Desde que iniciámos o suporte a webmasters de língua Portuguesa em 2006, vimos grande especulação acerca da forma como o Google indexa e avalia os sites. O mercado de língua Portuguesa, ainda numa fase de desenvolvimento em relação a SEO, é um dos maiores geradores de conteúdo na internet, por isso decidimos clarificar algumas das questões mais pertinentes.

Notámos como prática comum entre webmasters de língua Portuguesa a tendência para entrar em esquemas massivos de troca de links e a implementação de páginas única e exclusivamente para este fim, sem terem em consideração a qualidade dos links, a origem ou o impacto que estes terão nos seus sites a longo termo; outros temas populares englobam também uma preocupação excessiva com o PageRank ou a regularidade com que o Google acede aos seus sites.

Geralmente, o nosso conselho para quem pretende criar um site é começar por considerar aquilo que têm para oferecer antes de criar qualquer site ou blog. A receita para um site de sucesso é conteúdo original, onde os utilizadores possam encontrar informação de qualidade e actualizada correspondendo às suas necessidades.

Para clarificar alguns destes temas, compilámos algumas dicas para webmasters de língua Portuguesa:

- Ser considerado autoridade no assunto. Ser experiente num tema guiará de forma natural ao seu site utilizadores que procuram informação especificamente relacionada com o assunto do site. Não se preocupe demasiado com back-links ou PageRank, ambos irão surgir de forma natural acompanhando a importância e relevância do seu site. Se os utilizadores considerarem a sua informação útil e de qualidade, eles voltarão a visitar, recomendarão o seu site a outros utilizadores e criarão links para o mesmo. Isto tem também influência na relevância do seu site para o Google – se é relevante para os utilizadores, certamente será relevante para o Google na mesma proporção.

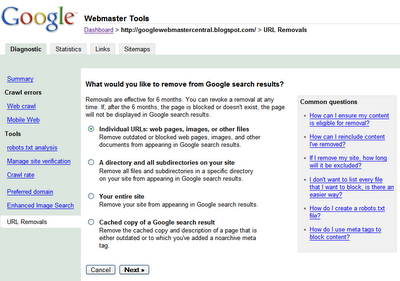

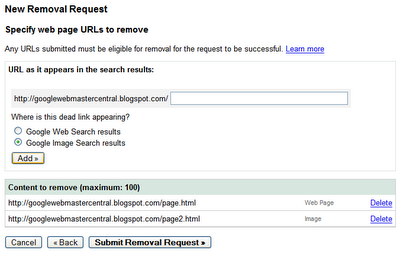

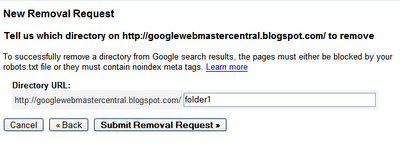

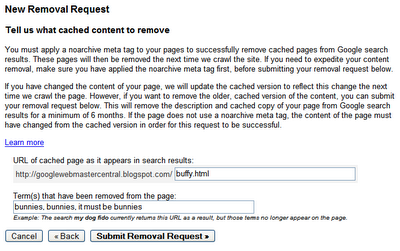

- Submeta o seu conteúdo no Google e mantenha-o actualizado frequentemente. Este é outro ponto chave que influencia a frequência com que o seu site é acedido pelo Google. Se o seu conteúdo não é actualizado ou se o seu site não é relevante, o mais certo é o Google não aceder ao seu site com a mesma frequência que você deseja. Se acha que o Google não acede ao seu site de uma forma constante, talvez isto seja uma dica para que actualize o site mais frequentemente. Além disso na Central do Webmaster o Google disponibiliza as Ferramentas para Webmasters, ferramentas úteis que o ajudarão na indexação.

- Evite puras afiliações. Na América Latina há uma quantidade massiva de sites criados apenas para pura afiliação, tais como as lojas afiliadas do mercadolivre. Não há problema em ser afiliado desde que crie conteúdo original e de qualidade para os utilizadores, um bom exemplo é a inclusão de avaliação e críticas de produtos de forma a ajudar o utilizador na decisão da compra.

- Não entre em esquemas de troca de links. Os esquemas de troca de links ou negócios que prometem aumentar a visibilidade do seu site com o mínimo de esforço, podem levar a um processo de correcção por parte do Google. As nossas Directrizes de Ajuda do Webmaster mencionam claramente esta prática na secção "Directrizes de Qualidade – princípios básicos". Evite entrar neste tipo de esquemas e não crie páginas apenas para troca de links. Tenha em mente que não é o número de links que apontam para o seu site que conta, mas a qualidade e relevância desses links.

- Use o AdSense de forma correcta. Monetizar conteúdo original e de qualidade levará a uma melhor experiência com o AdSense comparado com directórios sem qualquer tipo de qualidade ou conteúdo original. Sites sem qualquer tipo de valor levam os utilizadores a abandoná-los antes mesmo de estes clicarem em qualquer anúncio.

Lembre-se que o processo de indexação e de acesso ao seu site pelo Google engloba muitas variáveis e em muitos casos o seu site não aparecerá no índice tão depressa quanto esperava. Se não está seguro acerca de um problema particular, considere visitar as Directrizes de Ajuda do Webmaster ou peça ajuda na sua comunidade. Na maioria dos casos encontrará a resposta que procura de outros utilizadores mais experientes. Um dos sítios recomendados para começar é o Grupo de Discussão de Ajuda a Webmasters que monitorizamos regularmente.

Getting your site indexed

After registering a domain and creating a website, the next thing almost everybody wants is to get it indexed in Google and ranked high. Since we started supporting webmasters in the Portuguese-language market in 2006, we have seen growing speculation about how Google indexes and ranks websites. The Portuguese-language market is one of the biggest generators of web content and is still developing in terms of SEO, so we decided to shed some light on the most debated questions.

We have noticed that many Portuguese-speaking webmasters engage in massive link exchange schemes and build partner pages purely for cross-linking, disregarding the quality of the links, their sources, and the long-term impact on their sites. Other common concerns include an excessive focus on PageRank and on how often Google crawls their websites.

Generally, our advice is to consider what you have to offer before you create your own website or blog. The recipe for a good, successful site is unique and original content where users find valuable, up-to-date information that meets their needs.

To address some of these concerns, we have compiled some tips for Portuguese-speaking webmasters:

- Be an authority on the subject. Expertise in the subject you write about will naturally bring users who are searching for that specific topic to your site. Don't be too concerned about back-links and PageRank: both will grow naturally as your site becomes a reference. If users find your site useful and of good quality, they will most likely link to it, return to it, and/or recommend it to other users. This also influences how relevant your site is to Google: if it's relevant for users, then it's likely relevant to Google as well.

- Submit your content to Google and update it frequently. Frequent updates are another key factor in how often your site will be crawled. If your content is rarely updated, or if your site is not relevant to its subject, you will most likely not be crawled as often as you would like. If you wonder why Google doesn't crawl your site frequently or consistently, take it as a hint to update your site more often. In addition, Webmaster Central offers Webmaster Tools to help you get your site crawled.

- Don't engage in link exchange schemes. Link exchange programs or deals that promise to boost your site's visibility with minimal effort may lead to corrective action from Google. Our Google Webmaster Guidelines clearly address this issue under "Quality Guidelines – basic principles". Avoid these kinds of schemes, and don't build pages solely for exchanging links. Bear in mind that what matters is not the number of links pointing to your site, but their quality and relevance.

- Avoid pure affiliations. In the Latin American market there is a massive number of sites created purely for affiliation, such as bare MercadoLivre affiliate catalogs. Being an affiliate is fine as long as you add value for your users and produce content they can't find anywhere else, such as product reviews and ratings.

- Use AdSense wisely. Monetizing original, valuable content will generate more AdSense revenue than directories with no added value. Be aware that sites without added value drive users away before they ever click on an AdSense ad.
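The tip about submitting your content to Google does not say how to do it. One common mechanism is an XML Sitemap following the sitemaps.org protocol, which can be submitted through Webmaster Tools, and whose `<lastmod>` field records when each page last changed. The following is a minimal sketch, assuming your page URLs and last-modified dates are already known; the function name `build_sitemap` and the example.com URLs are hypothetical placeholders. An accurate sitemap helps Google discover your pages, but it does not by itself guarantee more frequent crawling or better ranking.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol (version 0.9).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (as a string) for a list of (url, last_modified) pairs.

    `build_sitemap` is a hypothetical helper; in practice the page list
    would come from your CMS or file system.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL automatically.
        ET.SubElement(entry, "loc").text = url
        # <lastmod> uses W3C Datetime format; a plain YYYY-MM-DD date is allowed.
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder pages on example.com, not real URLs.
    pages = [
        ("https://www.example.com/", date(2008, 1, 15)),
        ("https://www.example.com/reviews/camera", date(2008, 1, 10)),
    ]
    print(build_sitemap(pages))
```

Regenerating the file whenever you publish or update content keeps `<lastmod>` in step with the "update it frequently" advice above.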

Bear in mind that crawling and indexing involve many variables, and in many cases your site won't appear in the search results (SERPs) as quickly as you expected. If you are unsure about a particular issue, consider consulting the Google Webmaster Guidelines or seeking guidance in your community. In most cases you will get good advice and positive feedback from more experienced users. Recommended places to start include the Google discussion group for webmasters (in English) and the recently launched Portuguese discussion group for webmasters, which we will monitor regularly.