
Wednesday, August 29, 2012

Site Errors Breakdown

Today we’re announcing more detailed Site Error information in Webmaster Tools. This information is useful when looking for the source of your Site Errors. For example, if your site suffers from server connectivity problems, your server may simply be misconfigured; then again, it could also be completely unavailable! Since each Site Error category (DNS, Server Connectivity, and Robots.txt Fetch) comprises several distinct issues, we’ve broken down each category into more specific errors to provide you with a better analysis of your site’s health.

Site Errors will display statistics for each of your site-wide crawl errors from the past 90 days. In addition, it will show the failure rates for any category-specific errors that have been affecting your site.

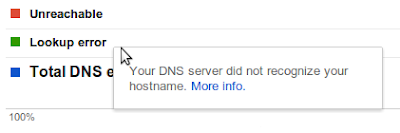

If you’re not sure what a particular error means, you can read a short description of it by hovering over its entry in the legend. You can find more detailed information by following the “More info” link in the tooltip.

We hope that these changes will make Site Errors even more informative and helpful in keeping your site in tip-top shape. If you have any questions or suggestions, please let us know through the Webmaster Tools Help Forum.

Written by Cesar Cuenca and Tiffany Wang, Webmaster Tools Interns

Monday, August 20, 2012

Search Queries Alerts in Webmaster Tools

We know many of you check Webmaster Tools daily (thank you!), but not everybody has the time to monitor the health of their site 24/7. It can be time-consuming to analyze all the data and identify the most important issues. To make it a little easier, we’ve been incorporating alerts into Webmaster Tools. We process the data for your site and try to detect the events that could be most interesting for you. Recently we rolled out alerts for Crawl Errors, and today we’re introducing alerts for Search Queries data.

The Search Queries feature in Webmaster Tools shows, among other things, impressions and clicks for your top pages over time. For most sites, these numbers follow regular patterns, so when sudden spikes or drops occur, it can make sense to look into what caused them. Some changes are due to differing demand for your content; others may be due to technical issues that need to be resolved, such as broken redirects. For example, a steady stream of clicks that suddenly drops to zero is probably worth investigating.
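As a rough illustration of the kind of pattern check described above, here is a minimal sketch that flags days whose clicks fall far outside the recent trend. The function name `flag_sudden_change`, the 7-day window, and the 3-standard-deviation threshold are hypothetical choices; this is not how Webmaster Tools actually computes its alerts.

```python
from statistics import mean, pstdev


def flag_sudden_change(daily_clicks, window=7, threshold=3.0):
    """Return indexes of days that deviate sharply from the trailing window.

    Hypothetical heuristic, not the Webmaster Tools algorithm:
    - a steady nonzero stream that suddenly drops to zero is flagged;
    - otherwise a day is flagged when it differs from the mean of the
      previous `window` days by more than `threshold` standard deviations.
    """
    flagged = []
    for i in range(window, len(daily_clicks)):
        history = daily_clicks[i - window:i]
        avg = mean(history)
        spread = pstdev(history)
        today = daily_clicks[i]
        if today == 0 and min(history) > 0:
            flagged.append(i)  # steady traffic that suddenly vanished
        elif spread > 0 and abs(today - avg) > threshold * spread:
            flagged.append(i)  # unusually large spike or drop
        # A perfectly flat history has no spread, so only the zero rule applies.
    return flagged


clicks = [120, 118, 125, 122, 119, 121, 124, 0, 0]
print(flag_sudden_change(clicks))  # → [7]
```

Day 7 is flagged because steady traffic fell to zero; day 8 is not, because its trailing window already contains the zero, which is one reason real alerting thresholds need tuning.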

The alerts look like this:

We’re still working on the sensitivity threshold of the messages and welcome your feedback in our help forums. We hope the new alerts will be useful. Don’t forget to sign up for email forwarding to receive them in your inbox.

Posted by Javier Tordable, Tech Lead, Webmaster Tools

Tuesday, August 14, 2012

Configuring URL Parameters in Webmaster Tools

We recently filmed a video (with slides available) to provide more information about the URL Parameters feature in Webmaster Tools. The URL Parameters feature is designed for webmasters who want to help Google crawl their site more efficiently, and who manage a site with -- you guessed it -- URL parameters! To be eligible for this feature, the URL parameters must be configured in key/value pairs like item=swedish-fish or category=gummy-candy in the URL http://www.example.com/product.php?item=swedish-fish&category=gummy-candy.

Video: Guidance for common cases when configuring URL Parameters. (Music in the background masks the ongoing pounding of my neighbor’s construction!)
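The key/value structure described above is exactly what Python's standard `urllib.parse` module extracts from a query string. This short sketch uses the example URL from this post; it only shows how the parameters decompose and is not part of Webmaster Tools.

```python
from urllib.parse import urlsplit, parse_qsl

url = "http://www.example.com/product.php?item=swedish-fish&category=gummy-candy"

# Separate the query string from the rest of the URL,
# then split it into (key, value) pairs.
query = urlsplit(url).query
params = parse_qsl(query)
print(params)  # → [('item', 'swedish-fish'), ('category', 'gummy-candy')]
```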

URL Parameter settings are powerful. By telling us how your parameters behave and the recommended action for Googlebot, you can improve your site’s crawl efficiency. On the other hand, if configured incorrectly, you may accidentally recommend that Google ignore important pages, resulting in those pages no longer being available in search results. (There's an example provided in our improved Help Center article.) So please take care when adjusting URL Parameters settings, and be sure that the actions you recommend for Googlebot make sense across your entire site.
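To see how an incorrect setting can hide important pages, consider a hypothetical crawler that collapses URLs by dropping every parameter it has been told does not change page content. The `collapse` helper, the `ignored` set, and the second product value `gummy-bears` are illustrative assumptions, not Googlebot's actual behavior. Ignoring `category` leaves each product reachable, but ignoring `item`, which does change the content, merges distinct products into a single URL.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def collapse(url, ignored):
    """Drop the parameters named in `ignored` (hypothetical crawler behavior)."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in ignored]
    return urlunsplit(parts._replace(query=urlencode(kept)))


urls = [
    "http://www.example.com/product.php?item=swedish-fish&category=gummy-candy",
    "http://www.example.com/product.php?item=gummy-bears&category=gummy-candy",
]

# Assumed safe here: both products share the same category value.
print({collapse(u, {"category"}) for u in urls})  # two distinct product URLs remain
# Mistake: `item` selects the product, so ignoring it merges both pages.
print({collapse(u, {"item"}) for u in urls})      # only one URL remains
```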

Written by Maile Ohye, Developer Programs Tech Lead

Thursday, August 9, 2012

Website testing & Google search

We’ve gotten several questions recently about whether website testing—such as A/B or multivariate testing—affects a site’s performance in search results. We’re glad you’re asking, because we’re glad you’re testing! A/B and multivariate testing are great ways of making sure that what you’re offering really appeals to your users.

Before we dig into the implications for search, a brief primer:

Website testing is when you try out different versions of your website (or a part of your website), and collect data about how users react to each version. You use software to track which version causes users to do-what-you-want-them-to-do most often: which one results in the most purchases, or the most email signups, or whatever you’re testing for. After the test is finished you can update your website to use the “winner” of the test—the most effective content.

A/B testing is when you run a test by creating multiple versions of a page, each with its own URL. When users try to access the original URL, you redirect some of them to each of the variation URLs and then compare users’ behaviour to see which page is most effective.
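One common way to split users between variation URLs is to hash a stable user identifier, so each user lands in the same bucket on every visit. The variant paths and the `assign_variant` helper below are hypothetical; testing tools normally handle this step for you. The only input is the user ID, never the user-agent, which keeps the assignment consistent with the no-cloaking guideline in this post.

```python
import hashlib

VARIANTS = ["/index.html", "/index-b.html", "/index-c.html"]  # hypothetical URLs


def assign_variant(user_id: str, variants=VARIANTS) -> str:
    """Deterministically map a user ID to one variant URL.

    Only the user ID is hashed; the user-agent is deliberately not an input,
    so no visitor is treated differently because of what it identifies as.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


print(assign_variant("user-42"))  # same answer on every call for this ID
```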

Multivariate testing is when you use software to change different parts of your website on the fly. You can test changes to multiple parts of a page (say, the heading, a photo, and the ‘Add to Cart’ button), and the software will show variations of each of these sections to users in different combinations and then statistically analyze which variations are the most effective. Only one URL is involved; the variations are inserted dynamically on the page.
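The number of combinations in a multivariate test grows multiplicatively with each section you vary. This sketch enumerates them with `itertools.product`; the section names and variation labels are made up for illustration.

```python
from itertools import product

# Hypothetical variations for three sections of a single page.
sections = {
    "heading": ["Welcome!", "Fresh candy daily"],
    "photo": ["candy.jpg", "shop.jpg"],
    "add_to_cart": ["Add to Cart", "Buy now", "Get it"],
}

# Every combination of one option per section; all are served from one URL.
combinations = [dict(zip(sections, combo)) for combo in product(*sections.values())]
print(len(combinations))  # → 12  (2 * 2 * 3)
print(combinations[0])
```

Each extra section or option multiplies the number of combinations, so the test needs more traffic before it can reach a reliable result.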

So how does this affect what Googlebot sees on your site? Will serving different content variants change how your site ranks? Below are some guidelines for running an effective test with minimal impact on your site’s search performance.

- No cloaking.

Cloaking (showing one set of content to humans and a different set to Googlebot) is against our Webmaster Guidelines, whether you’re running a test or not. Make sure that you’re not deciding whether to serve the test, or which content variant to serve, based on user-agent. An example of this would be always serving the original content when you see the user-agent “Googlebot.” Remember that violating our Guidelines can get your site demoted or removed from Google search results, which is probably not the desired outcome of your test.

- Use rel="canonical".

If you’re running an A/B test with multiple URLs, you can use the rel="canonical" link attribute on all of your alternate URLs to indicate that the original URL is the preferred version. We recommend using rel="canonical" rather than a noindex meta tag because it more closely matches your intent in this situation. Let’s say you were testing variations of your homepage: you don’t want search engines to stop indexing your homepage; you just want them to understand that all the test URLs are close duplicates or variations of the original URL and should be grouped as such, with the original URL as the canonical. Using noindex rather than rel="canonical" in such a situation can sometimes have unexpected effects (e.g., if for some reason we choose one of the variant URLs as the canonical, the “original” URL might also get dropped from the index since it would be treated as a duplicate).

- Use 302s, not 301s.

If you’re running an A/B test that redirects users from the original URL to a variation URL, use a 302 (temporary) redirect, not a 301 (permanent) redirect. This tells search engines that the redirect is temporary (it will only be in place as long as you’re running the experiment) and that they should keep the original URL in their index rather than replacing it with the target of the redirect (the test page). JavaScript-based redirects are also fine.

- Only run the experiment as long as necessary.

The amount of time required for a reliable test will vary depending on factors like your conversion rates, and how much traffic your website gets; a good testing tool should tell you when you’ve gathered enough data to draw a reliable conclusion. Once you’ve concluded the test, you should update your site with the desired content variation(s) and remove all elements of the test as soon as possible, such as alternate URLs or testing scripts and markup. If we discover a site running an experiment for an unnecessarily long time, we may interpret this as an attempt to deceive search engines and take action accordingly. This is especially true if you’re serving one content variant to a large percentage of your users.
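The guidelines above can be combined in a small server-side sketch built on Python's standard WSGI interface. The variant path `/home-b.html`, the `uid` cookie, and the hashing scheme are hypothetical. The original URL issues a 302 (temporary) redirect to the visitor's assigned variant, the assignment never reads the User-Agent header, and the variation page declares the original URL as canonical.

```python
import hashlib
from http.cookies import SimpleCookie

ORIGINAL = "http://www.example.com/"  # the URL that should stay in the index
VARIANTS = ["/", "/home-b.html"]      # hypothetical test URLs; "/" is the control


def pick_variant(environ):
    """Choose a variant from a hypothetical "uid" cookie, else the client IP.

    HTTP_USER_AGENT is never read, so Googlebot is not singled out.
    """
    cookie = SimpleCookie(environ.get("HTTP_COOKIE", ""))
    key = cookie["uid"].value if "uid" in cookie else environ.get("REMOTE_ADDR", "")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return VARIANTS[digest[0] % len(VARIANTS)]


def app(environ, start_response):
    path = environ.get("PATH_INFO", "/")
    if path == "/":
        target = pick_variant(environ)
        if target != "/":
            # 302 (temporary): search engines keep the original URL indexed.
            start_response("302 Found", [("Location", target)])
            return [b""]
        body = "<html><head><title>Home</title></head><body>Original homepage</body></html>"
    elif path in VARIANTS:
        # The variation names the original URL as its canonical version.
        body = (
            "<html><head><title>Home</title>"
            f'<link rel="canonical" href="{ORIGINAL}"></head>'
            "<body>Test variation</body></html>"
        )
    else:
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not found"]
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [body.encode("utf-8")]
```

For a quick local trial, the standard library's `wsgiref.simple_server.make_server` can serve `app`. When the test ends, removing the `/home-b.html` route and the redirect branch returns the site to a single URL, in line with the advice above.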

To learn more about website testing, check out these articles on Content Experiments, our free testing tool in Google Analytics. You can also ask questions about website testing in the Analytics Help Forum, or about search impact in the Webmaster Help Forum.

Posted by Susan Moskwa, Webmaster Trends Analyst

Wednesday, August 1, 2012

Domain verification using CNAME records

Webmaster Level: all

In order to use Google services like Webmaster Tools and Google Apps, you must verify that you own the site or domain. One way you can do this is by creating a DNS TXT record to prove your ownership of the domain. Now you can also use DNS CNAME records to verify ownership of your domains. This is a new domain verification option for users who are not able to create DNS TXT records for their domains.

For example, if you own the domain example.com, you can verify your ownership of the domain by creating a DNS CNAME record as follows.

- Add the domain example.com to your account either in Webmaster Tools or directly on the Verification Home page.

- Select the Domain Name Provider method of verification, then select your domain name provider that manages your DNS records or "Other" if your provider is not on this list.

- Based on your selection you may either see the instructions to set a CNAME record or see a link to the option Add a CNAME record. Follow the instructions to add the specified CNAME record to your domain’s DNS configuration.

- Click the Verify button.
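In a BIND-style zone file, the record you add in the steps above is a single line mapping a host name to a target. This sketch only formats such a line; the `cname_zone_line` helper, the label, and the target are hypothetical placeholders, so copy the real values from the verification page rather than from this example. Many providers offer a web form instead of a zone file, in which case you enter the same two values there.

```python
def cname_zone_line(label: str, target: str, domain: str = "example.com", ttl: int = 3600) -> str:
    """Format a BIND-style CNAME record; `label` and `target` are placeholders."""
    # A trailing dot marks a name as fully qualified in zone-file syntax.
    fqdn = f"{label}.{domain}."
    target = target if target.endswith(".") else target + "."
    return f"{fqdn}\t{ttl}\tIN\tCNAME\t{target}"


print(cname_zone_line("your-label", "your-target.example.net"))
```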

When you click Verify, Google will check for the CNAME record and if everything works you will be added as a verified owner of the domain. Using this method automatically verifies you as the owner of all websites on this domain. For example, when you verify your ownership of example.com, you are automatically verified as an owner of www.example.com as well as subdomains such as blog.example.com.
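The rule in the paragraph above, that verifying a domain also covers `www` and every other subdomain, amounts to a suffix match on whole DNS labels. The `covered_by` helper is hypothetical, meant only for reasoning about which hosts a domain verification covers; it is not a Google API.

```python
def covered_by(host: str, verified_domain: str) -> bool:
    """True if `host` is the verified domain itself or one of its subdomains."""
    host = host.lower().rstrip(".")
    domain = verified_domain.lower().rstrip(".")
    # Match whole labels so "notexample.com" is not treated as "example.com".
    return host == domain or host.endswith("." + domain)


for h in ["example.com", "www.example.com", "blog.example.com", "notexample.com"]:
    print(h, covered_by(h, "example.com"))
```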

Sometimes DNS records take a while to make their way across the Internet. If we don't find the record immediately, we'll check for it periodically, and when we find the record we'll make you a verified owner. To maintain your verification status, don’t remove the record, even after verification succeeds.

If you don’t have access to your DNS configuration at your domain name provider, you can continue to use any of the other verification methods, such as the HTML file, the meta tag, or the Google Analytics tag, to verify that you own a site.

If you have any questions please let us know via our Webmaster Help forum.

Posted by Pooja Wagh, Software Engineer